These days, barely a week goes by without someone making a prediction about the state of AI. Last November, Nvidia’s CEO Jensen Huang said AI will be ‘fairly competitive’ with humans in 5 years. In February he said that we won’t need programmers anymore because computers in the future will just be programmed in human language. Elon Musk has famously predicted that fully autonomous driving will be available “next year” every year for a couple of years.

So what to make of these predictions? Why are otherwise smart people coming up with such predictions even though they are constantly disproven? Or are all these just marketing plays to keep investors happy?

My suggestion is to not take these predictions at face value but rather interpret the stated time interval as an expression of how much uncertainty we have about achieving the predicted outcome.

So “this year” can be read to mean “we know essentially how to do it, we just need to build it.” A couple of months is a (fast) development cycle for a complex product. Probably there aren’t that many open questions here. (Well, unless you’re Elon Musk and you’re overestimating your capabilities.)

“Within the next 1-2 years” means that there might be a path but a few things need to fall into place, and you have an idea where the answers could come from. But they still need to happen, and then you need to build it.

“Within the next 5 years” sounds to me like there really is something missing and we need to find that, and then maybe it’s another 2 years of building it.

“In the next 10 years” essentially means that there are so many unknowns and missing pieces that it could be built in 10 years, but we would need to be really lucky.

“In 20 years” means you have no idea how to get there but hey, who knows?

Let’s face it, technological progress is very hard to predict. Every few years, an invention comes along that fundamentally changes our everyday lives. Like the car, the television, or the smartphone. Sometimes there are signs. Apple had already built the iPod, which had a similar form factor, so maybe you could extrapolate that you could put a whole phone into that, but some things were still missing. Technology does not grow linearly; it makes hard-to-predict jumps followed by more predictable intervals of continual improvement.

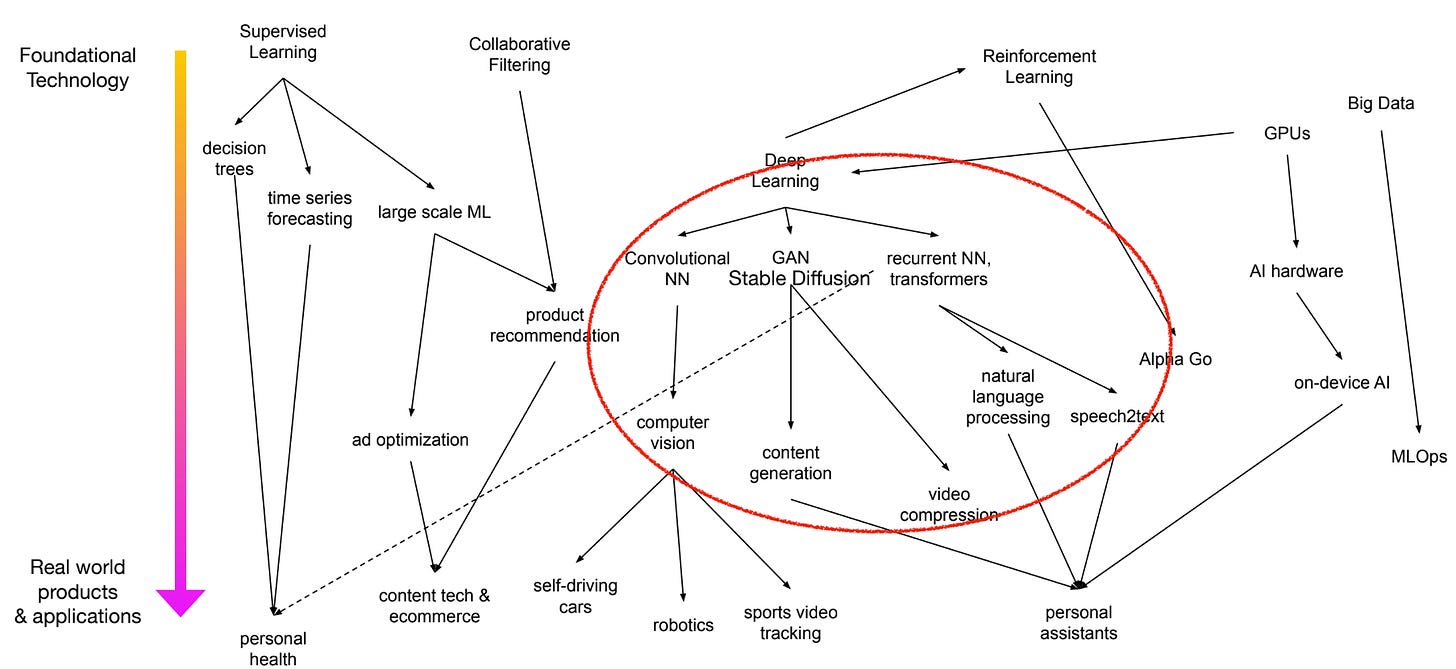

Even when you cannot predict when it will happen, you can take a look at the current technology landscape and then try to figure out how potential breakthroughs might percolate down WHEN they happen. Here’s a figure I made at the end of 2020 for a talk, and interestingly, personal assistants are popping up as a potential result if NLP and more energy-efficient AI hardware become a reality. These days it seems companies are (so far unsuccessfully) trying to put this together by relying on fast mobile Internet instead, but Apple is working on putting more AI compute into their next generation of chips.

So when is AGI going to happen? I don’t know. We have some technology that we didn’t have before, but I’d say there are some question marks over whether LLMs can really be made to reason, or stop being so full of themselves. If you believe the required steps are close, you’re probably in the 2-5 year camp; otherwise you’re more in the 10-year or even 20-year camp. I’m personally more in the 10-20 year camp, but again, that’s just an expression of how I currently assess what’s still missing.

I’ve recently closed my Twitter account, but you can now follow me on Threads.